Scalability: Horizontal and Vertical Scaling

Also covers Load Balancing, Caching and Replication

I took down the important points from CS75 Lecture 9 on Scalability. It packed lots of tiny informative details into the big picture, so I made it into a blog post.

Here is the awesome original lecture.

What features do you expect or should you consider from web hosting companies?

Is it blocked in different regions based on IP?

SFTP vs FTP: The "S" stands for "Secure". SFTP encrypts all traffic, which matters little for public files but is essential for protecting your username and password.

On a shared host, are resources split between a lot of different people/users?

Alternatives to web hosting companies?

A Virtual Private Server (VPS) gives you your own virtual machine with its own operating system and filesystem. The provider runs multiple virtual machines on a single physical host, so each customer gets a slice of the hardware and can make the most of it.

Examples include DreamHost, GoDaddy, Linode, and Amazon Web Services EC2.

A single core can only do one task at a time, such as one web request. Multiple processes or threads only give the impression that multiple tasks are being done simultaneously. A dual-core or quad-core CPU can genuinely do two or four tasks at once.

Vertical scaling

TLDR - You have to scale and increase capacity. The easiest way to do this is to upgrade a single machine with better hardware (more CPU, more RAM, faster disks), which is referred to as vertical scaling.

Cons

Hardware has limits

Single point of failure

Costlier to set up state-of-the-art hardware

Pros

Easier to understand (maybe not)

Data consistency

A SATA hard drive typically spins at 5,400 or 7,200 RPM, whereas a SAS drive is much faster, running at up to 15,000 RPM. Usually, databases with more read/write operations are installed on SAS drives. SSDs are even faster, as they lack any moving parts.

Horizontal Scaling

TLDR - Rather than getting a few high-performing machines, get a larger number of decent ones.

Load Balancing

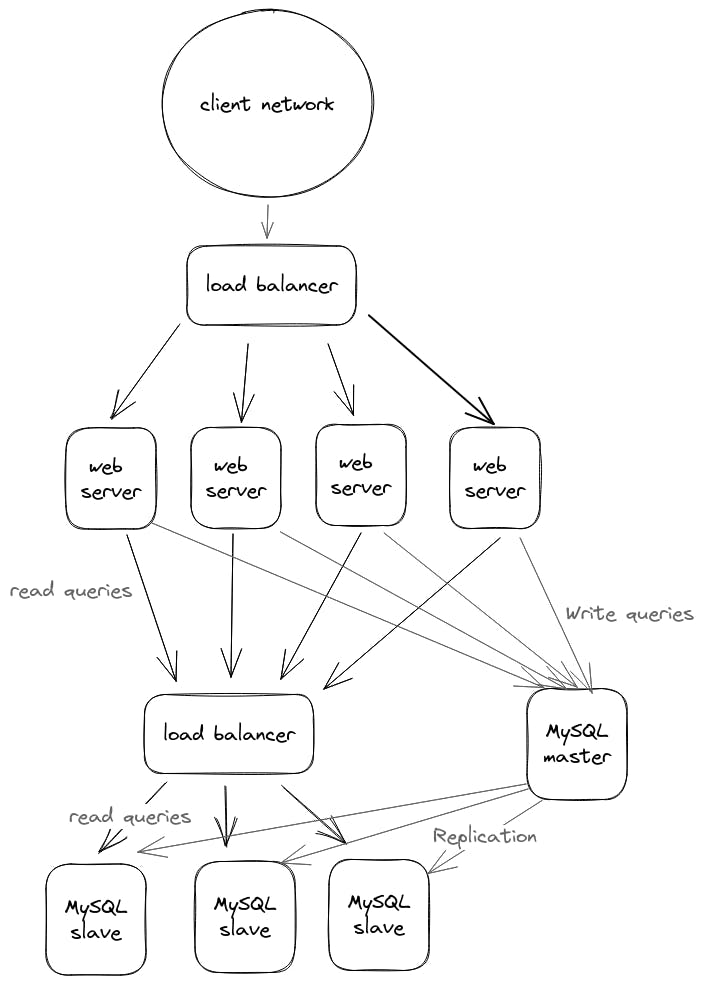

We need a load balancer when we use Horizontal scaling. It will spread incoming requests over the different machines.

The load balancer's goal is to send requests to the least busy server (or the same server, depending on the algorithm) to share the workload of all the servers.

Some more nice things about load balancers

Only the load balancer needs a public IP address (this is the address clients get back from DNS), so the backend servers can have private IP addresses.

No one outside the network can reach these private servers directly. This provides both privacy and security, and it prevents people from trying to SSH into, or perform a DoS attack on, particular servers in the network without going through the load balancer.

IPv4 addresses are limited, so having private addresses means more addresses are available.

Load balancers can be implemented at both software and hardware levels.

Software-level implementations include Elastic Load Balancer (AWS), HAProxy, and Linux Virtual Server (LVS). Hardware implementations, which are more expensive, include Barracuda, Cisco, Citrix, and F5.

Coming back to Horizontal Scaling,

If every server holds an identical copy of the same content, that duplication wastes storage.

Alternatively, different things can be hosted on different servers, such as images on one and HTML on another, and each request is then routed to the right server. A load balancer can be used for this.

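Round Robin load balancing rotates through the list of server IPs, handing out the next one each time. It's simple but not very intelligent, since it ignores how busy each server actually is.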

DNS responses are cached by browsers and resolvers, so a heavy user can stay pinned to one server and the load ends up uneven. The TTL ("time to live") controls how long a DNS response stays in the cache.
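
To make the difference between the strategies concrete, here is a minimal sketch of round robin next to a "least busy" pick. The backend addresses and the connection counts are made up for illustration; a real load balancer would track connections itself.

```python
from itertools import cycle

# Hypothetical private addresses of the backend servers.
BACKENDS = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]


def make_round_robin(backends):
    """Return a function that hands out backends in strict rotation."""
    rotation = cycle(backends)
    return lambda: next(rotation)


def least_busy(active_connections):
    """Pick the backend with the fewest active connections.

    `active_connections` maps backend address -> current connection count
    (an assumed bookkeeping structure, not something from the lecture).
    """
    return min(active_connections, key=active_connections.get)


pick = make_round_robin(BACKENDS)
first_four = [pick() for _ in range(4)]  # wraps back to the first server
```

Round robin never looks at load, which is exactly why it is "not very intelligent": the fourth request goes back to the first server even if that server is still busy with a heavy request.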

When we scale horizontally, sessions and cookies can become an issue. If the backend servers run PHP, load balancing can break sessions. This is because PHP by default stores session data as files in the /tmp directory of the server that handled the request. As a result, if the load balancer switches you to a different server, you will appear logged out, since your session file does not exist there.

We can store the address of the backend server in a cookie, so the next time the user visits the website, the request is served by the same backend server. The downside of storing the private IP directly like this is that the IP (even though it's private) becomes visible to the rest of the world, and attackers could spoof the cookie to reach particular servers.

An alternative is to store a random number in the cookie and let the load balancer keep track of which number corresponds to which server. This way the user is always sent to the same server within one session. The same effect can also be achieved with the IP Hash method, where the server is chosen from a hash of the client's IP address.
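
Here is a rough sketch of both ideas, assuming a load balancer that keeps its token table in memory. The class name, method names, and backend addresses are all hypothetical; real load balancers expose this as configuration rather than code.

```python
import hashlib
import secrets

# Hypothetical private addresses of the backend servers.
BACKENDS = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]


class StickyBalancer:
    """Maps an opaque random cookie value to a backend server."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.token_to_backend = {}
        self.next_index = 0

    def route(self, token=None):
        """Return (token, backend); unknown or missing tokens get a new one."""
        if token in self.token_to_backend:
            return token, self.token_to_backend[token]
        token = secrets.token_hex(16)  # random value, reveals nothing
        backend = self.backends[self.next_index % len(self.backends)]
        self.next_index += 1
        self.token_to_backend[token] = backend
        return token, backend


def ip_hash(client_ip, backends):
    """IP Hash: the same client IP always maps to the same backend."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]
```

The cookie only ever carries the random token, so the private IP never leaves the network. IP Hash needs no cookie at all, but users behind the same shared IP all land on the same server.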

Caching

PHP Acceleration - Normally PHP compiles a source file into opcodes, uses them for the current request, and then throws them away. An opcode cache keeps the compiled result for the next request as well, as long as the file doesn't change. Python does the same with .pyc files.

Another form of caching is to generate pages once and store them as static HTML files. Doing this on a large scale could be risky, but it is beneficial where the number of reads is much higher than the number of writes, such as on Craigslist, which serves HTML files. Servers are extremely fast at serving static HTML content.

MVC enables us to use templates to make easy modifications, while raw HTML would make restructuring much more challenging.

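MySQL offers query caching through a feature called the MySQL Query Cache, which stores the result of a SELECT so that an identical query can be answered without running it again. (The query cache was removed in MySQL 8.0.)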

Memcached is an in-memory cache that runs on a server, either the same as or different from the web server. It stores the cache in RAM.

In terms of speed: reading/writing files on disk << MySQL (running as a server) << MySQL query cache < Memcached

Reading data straight from files on disk is slow, since each request has to load the file and search through it. To get faster results, you can use a MySQL server, which is constantly running and indexes the data. Caching those queries will make it faster, and Memcached can further increase the speed.
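
The usual way to put a cache in front of a database is to check the cache first and only fall back to the database on a miss. Below is a minimal sketch of that pattern; the dictionary stands in for Memcached, and `slow_database_lookup` is a hypothetical stand-in for a MySQL query.

```python
cache = {}      # stand-in for Memcached: key -> value held in RAM
db_calls = []   # records every time the "database" is actually hit


def slow_database_lookup(user_id):
    """Hypothetical stand-in for a MySQL query."""
    db_calls.append(user_id)
    return {"id": user_id, "name": f"user{user_id}"}


def get_user(user_id):
    """Check the cache first; on a miss, query the database and cache it."""
    key = f"user:{user_id}"
    if key in cache:
        return cache[key]
    user = slow_database_lookup(user_id)
    cache[key] = user
    return user
```

Calling `get_user` twice with the same id hits the database only once. The catch is that a cached entry can go stale if the row changes, so writes need to update or delete the cached copy.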

Since Memcached lives in RAM, it can run out of space. To prevent this, unused objects have to be expired from the cache based on when they were last used. Memcached does this by evicting the least recently used (LRU) objects first.
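
A toy version of "expire whatever was used least recently" can be sketched with an `OrderedDict`. This is only an illustration of the eviction idea, not how Memcached is implemented internally; the class name and capacity are made up.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used key."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def set(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # drop the least recently used
```

With a capacity of 2, storing `a` and `b`, reading `a`, and then storing `c` evicts `b`, because `a` was touched more recently.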

Facebook used Memcached heavily because the site was read-heavy in its early days.

Storage Engines

InnoDB - the default storage engine; supports transactions

MyISAM - supports table-level locks but not transactions

Archive - tables are compressed, so they are slower to query. Used for things like logs that rarely get selected. Trades query performance for long-term disk savings

Replication

Master-Slave in databases

Queries are executed in the master server and then replicated to the slave server.

If the master server fails, there is an identical backup.

Redundant data storage is used.

Is load balancing over the slave servers a good topology for services like Facebook, which was more read-heavy in its early days?

Writes are done to the master server, and reads are done through the slaves.

There is still a single point of failure for writes: the master server.
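
The read/write split can be sketched as a tiny router that looks at the first word of the query. The server names are placeholders, and treating only `SELECT` as a read is a simplification I am assuming for the example.

```python
from itertools import cycle


class ReplicatedDatabaseRouter:
    """Sends writes to the master and spreads reads over the slaves."""

    def __init__(self, master, slaves):
        self.master = master
        self._slaves = cycle(slaves)  # simple round robin over the slaves

    def server_for(self, sql):
        words = sql.split()
        verb = words[0].upper() if words else ""
        if verb == "SELECT":
            return next(self._slaves)
        # INSERT, UPDATE, DELETE, and anything unrecognized go to the master.
        return self.master
```

A router built with one master and two slaves sends every `INSERT` to the master and alternates `SELECT` queries between the slaves. Since the slaves copy from the master, a read right after a write may briefly see old data.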

Master-Master in databases

Writes can be done to either master server and the query will be replicated to the other.

Reads can be done through either server.

Combining Parts of both we get something like this

Combining all these concepts

However, there is still a single point of failure in the load balancer. So we can use two load balancers in an active-active mode (which translates to master-master).

Multiple load balancers → The load balancers send each other heartbeats (small packets at regular intervals). When one load balancer (LB2) stops sending heartbeats, the other one (LB1) assumes that LB2 is down, takes over all the work, and takes over its IP address. In an active-passive setup, this is the point where the passive load balancer promotes itself to active.
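
The heartbeat check boils down to "how long since I last heard from my peer?". Here is a small sketch of that logic, with time passed in explicitly so it is easy to follow. The class, the 3-second timeout, and the `owns_shared_ip` flag are assumptions for illustration; actually moving an IP address is the job of the operating system and network.

```python
class LoadBalancer:
    """Tracks a peer's heartbeats and takes over when they stop."""

    def __init__(self, name, timeout_seconds=3.0):
        self.name = name
        self.timeout_seconds = timeout_seconds
        self.last_peer_heartbeat = 0.0
        self.owns_shared_ip = False

    def receive_heartbeat(self, now):
        """Record the time at which the peer last proved it is alive."""
        self.last_peer_heartbeat = now

    def check_peer(self, now):
        """Return True if the peer looks alive; otherwise take over the IP."""
        peer_alive = (now - self.last_peer_heartbeat) <= self.timeout_seconds
        if not peer_alive:
            self.owns_shared_ip = True
        return peer_alive
```

If LB1 last heard from LB2 at second 10, a check at second 12 passes, while a check at second 14.5 exceeds the timeout and LB1 claims the shared IP.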


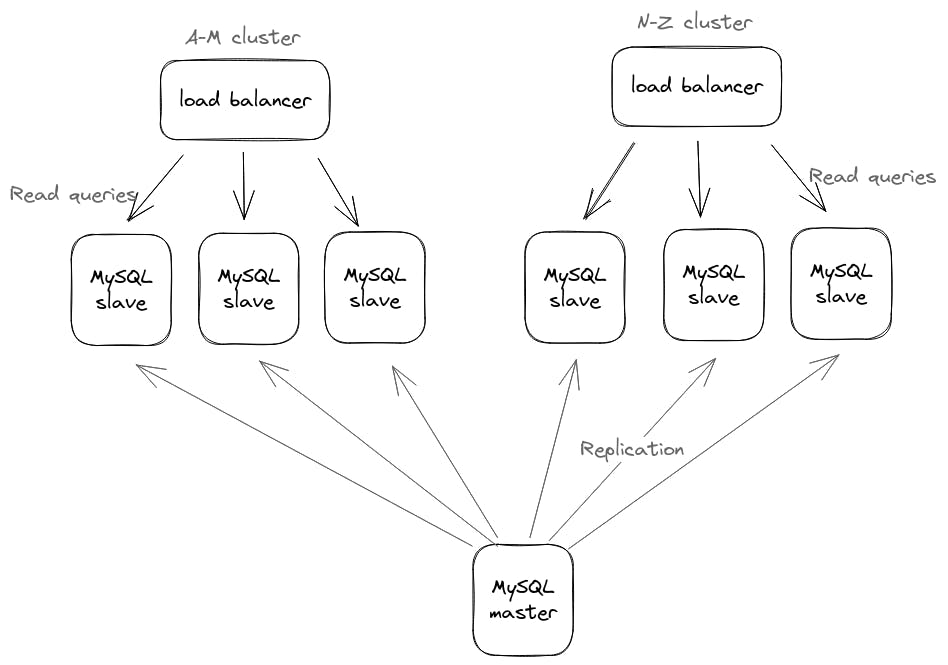

In the early days, Facebook had separate databases and files for harvard.facebook.com and mit.facebook.com. This was an example of partitioning. When Boston University joined, a third server was needed. Initially, users could only access their own network (e.g. only their own school's Facebook).

Partitioning could be based on different characteristics like names and other details. For example, A-L would be in database 1 and M-Z in database 2.
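
The A-L / M-Z split can be written as a small lookup function. The database names are placeholders, and the choice to partition on the first letter of the last name is just the example from above.

```python
def database_for(last_name):
    """Pick a database based on the first letter of the last name."""
    first_letter = last_name.strip()[:1].upper()
    if "A" <= first_letter <= "L":
        return "database1"
    if "M" <= first_letter <= "Z":
        return "database2"
    raise ValueError(f"cannot partition name: {last_name!r}")
```

Every lookup for a given user goes to exactly one database, so each database only has to hold and serve part of the data. The downside is that the split can be uneven if far more names fall in one range.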

High Availability - A pair of servers are used to monitor each other's heartbeats and are prepared to take over in case of failure.

Global Load Balancing can be based on regional availability.

What kind of traffic do you want to allow into the data center / network?

TCP Port 80 is used for HTTP

TCP Port 443 is used for HTTPS, but the traffic stays encrypted only as far as the load balancer

If Port 22 is blocked, you cannot SSH in directly; you must first connect through an SSL-based VPN and then SSH from inside the network

SSL is offloaded to the load balancer, so decryption happens there and traffic behind it travels unencrypted

TCP Port 3306 (MySQL) should not be exposed to the outside world - follow the Principle of Least Privilege and use a firewall that allows only certain ports.
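
As a rough illustration of least privilege, a firewall rule set is basically an allow-list: anything not explicitly permitted is dropped. The sketch below models that idea only; the set of ports is my assumption based on the list above, and a real firewall is configured with its own tools, not Python.

```python
# Inbound TCP ports allowed from the internet: HTTP and HTTPS only.
ALLOWED_INBOUND_TCP_PORTS = {80, 443}


def allow_inbound(port):
    """Default deny: a port is allowed only if it is on the allow-list."""
    return port in ALLOWED_INBOUND_TCP_PORTS
```

With this list, requests to ports 80 and 443 get through, while 3306 (MySQL) and 22 (SSH) are rejected from the outside.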

If you reached the end, then cheers, and thanks for reading!! (This was my first ever blog post.)